News

- Apr 23, 2021

The journal paper from the scooter team in our lab is accepted to Paladyn, Journal of Behavioral Robotics!


Abstract

The design of user interfaces for assistive robot systems can be improved through the use of a set of design guidelines presented in this paper. As an example use, the paper presents two different user interface designs for an assistive manipulation robot system. We explore the design considerations from these two contrasting user interfaces. The first is referred to as the graphical user interface, which the user operates entirely through a touchscreen as a representation of the state of the art. The second is a type of novel user interface referred to as the tangible user interface. The tangible user interface makes use of devices in the real world, such as laser pointers and a projector-camera system that enables augmented reality. Each of these interfaces is designed to allow the system to be operated by an untrained user in an open environment such as a grocery store. Our goal is for these guidelines to aid researchers in the design of human-robot interaction for assistive robot systems, particularly when designing multiple interaction methods for direct comparison.

1 Introduction

Many different types of user interfaces (UIs) exist for assistive robots that are designed to help people with disabilities with activities of daily living (ADLs) while maintaining their sense of independence. For example, Graf, Hans, and Schraft present a touchscreen interface for object selection with an assistive robot manipulator [1]. For direct interaction with the world, Kemp, Anderson, Nguyen, Trevor, and Xu present a user interface that uses a laser pointer to select objects, and Gelšvartas, Simutis, and Maskeliūnas present a projection mapping system that can be used to highlight specific objects in the real world for selection [2, 3].

For our research on the development of different types of user interfaces for assistive robotics, we have had to solve the problem of directly comparing the usability of user interface designs despite the modes of interaction between the user and the system being entirely different. How can a direct comparison be made between radically different access methods? Without the ability for a direct comparison, how can we learn the best methods for designing human-robot interaction (HRI) for assistive robot systems?

In this paper, we present a framework for designing user interfaces for assistive robots that ensures that any two user interfaces are directly comparable. We discuss a set of core design principles that we have identified as being important for user interfaces for assistive robots, and the importance of guaranteeing that the underlying process for operating the system is consistent through the use of a state diagram that describes the loop of interactions between human and robot. Finally, we discuss the implementation of these design guidelines for two user interfaces we have designed for the assistive robotic scooter system presented in [4].

2 Related work

When designing our framework, we researched different examples of assistive robotics and their user interfaces (UIs) in order to determine the design guidelines for our framework. A few notable assistive robot systems are Martens, Ruchel, Lang, Ivlev, and Graser’s semi-autonomous robotic wheelchair [5], Grice and Kemp’s teleoperated PR2 controlled through a web browser [6], Achic, Montero, Penaloza, and Cuellar’s hybrid brain-computer interface for assistive wheelchairs [7], and Nickelsen’s study of the assistive robot Bestic, designed to help those with motor disabilities [8].

With regard to user interface designs, we examined UIs that utilized laser pointing devices and touchscreens, along with general pick-and-place interfaces, to reference when designing our two test UIs. Some notable touchscreen interfaces include Graf, Hans, and Schraft’s touchscreen interface for object selection for general home assistance robots [1], Cofre, Moraga, Rusu, Mercado, Inostroza, and Jimenez’s smart home interface designed for people with motor disabilities [9], and Choi, Anderson, Glass, and Kemp’s object selection touchscreen interface designed for amyotrophic lateral sclerosis (ALS) patients [10]. Notable UIs that implement a laser pointing device include Kemp, Anderson, Nguyen, Trevor, and Xu’s interface that uses a laser pointer to select items [2], Gualtieri, Kuczynski, Shultz, Ten Pas, Platt, and Yanco’s laser pointer selection interface for robotic scooter systems [11], and again Choi, Anderson, Glass, and Kemp’s interface for ALS patients using an ear-mounted laser pointer [10]. In the pick-and-place interface area, Quintero, Tatsambon, Gridseth, and Jägersand’s UI uses gestures to control the arm [12], Rouhollahi, Azmoun, and Masouleh’s UI was written using the Qt toolkit [13], and Chacko and Kapila’s augmented reality based interface used smartphones as input devices [14].

Expanding upon Gualtieri, Kuczynski, Shultz, Ten Pas, Platt, and Yanco’s previously mentioned laser pointer selection interface [11], Wang et al. presented an interface composed of a laser pointer, a screen, and a projector-camera system that enables augmented reality [4]. This interface informed the user of the location of a pick-and-place procedure by projecting light with a projector. The system used to demonstrate our framework in this paper expands upon Wang et al.’s improvements.

3 Guidelines for User Interface Development and Evaluation for Assistive Robotics (GUIDE-AR)

In order to create user interfaces that are generally easy to use for people with disabilities and to be able to directly compare different user interfaces, we created Guidelines for User Interface Development and Evaluation for Assistive Robotics (GUIDE-AR). GUIDE-AR’s design principles are those that we deem to be essential for user interfaces intended for assistive robots. Along with these principles, GUIDE-AR includes the use of a state diagram that represents the process of interactions between the user and the system. This state diagram allows for the direct comparison of different types of user interfaces and access methods by representing the human-robot interaction in a procedural manner. It also streamlines the process of comparing interfaces and, by exposing each step in the HRI process for examination, helps eliminate unnecessary complexity.

3.1 Design Principles

When envisioning the design principles for user interfaces for assistive technology, our high-level goal was the development of a system that would be usable by a novice user in an open environment. We have defined our design principles based upon this goal; the UI of such a system should be:

- Communicative. Because we believe systems should be designed for an untrained user, the UI must offer the user enough feedback so that a novice user can operate the system with little to no instruction. The UI should clearly communicate its current state so that it is never unclear what the system is doing. For systems that are expected to be used long term, allowing the user to select the amount of communication provided by the system will increase its usability.

- Reliable. The real world can be messy and unpredictable, so it is unavoidable that the user or the system might make errors. To mitigate this problem, the system should have robust error handling in the case of either a system failure (e.g., the robot fails to plan a grasp to the selected object) or a user error (e.g., the user selects the wrong object).

- Accessible. The system should be accessible to people with a wide variety of different ability levels, independently of their physical or cognitive abilities, or level of experience with robots. This requirement for accessibility includes the need to design the UI to have the ability to be adapted to a wide variety of access methods (e.g., switches, sip and puff, joysticks) that are utilized for controlling powered wheelchairs and for using computers.

3.2 Describing the Human-Robot Loop

The interaction process between the user and the robot system can be represented with a state diagram. As an example, Figure 1 shows the state machine for an elevator, where labels on the nodes represent states and labels on the edges represent changes in state as a result of user action. The process of creating this state diagram can allow for the process to be streamlined, if redundancies or inefficiencies are discovered.

Additionally, when we design different UIs that each conform to the same state diagram for the interaction process, we can enforce that they are directly comparable when researching their performance. In this case, the only independent variable in the comparison will be the mode of interaction with the user interface. For the elevator example, this type of UI design would mean that you could directly evaluate the performance of a less conventional assistive interface (e.g., voice controls or gestures) against the traditional button-based UI.

States

| State | Description |
| --- | --- |
| Summon | The elevator is travelling to the requested floor |
| Close | The elevator doors are closed |
| Open | The elevator doors are open |
| Travel | The elevator is travelling to the requested floor |
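
To make the comparability argument concrete, the following is a minimal sketch of two elevator front ends driving one shared state machine. Figure 1 is not reproduced here, so the event names (`call`, `arrive`, `select_floor`, `leave`), the edges, and the two input mappings are all assumptions chosen for illustration; only the four state names come from the table above.

```python
# Hypothetical transition table for the elevator example. The state names
# come from the table above; the events and edges are assumptions.
TRANSITIONS = {
    ("Close", "call"): "Summon",
    ("Summon", "arrive"): "Open",
    ("Open", "select_floor"): "Travel",
    ("Travel", "arrive"): "Open",
    ("Open", "leave"): "Close",
}


class Elevator:
    """Interaction process shared by every UI front end."""

    def __init__(self):
        self.state = "Close"

    def handle(self, event):
        # Reject events that are not valid in the current state.
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"{event!r} is not valid in state {self.state!r}")
        self.state = TRANSITIONS[key]
        return self.state


# Two front ends translate their own raw inputs into the same events.
# Both mappings are invented for this sketch.
BUTTON_UI = {"hall_button": "call", "floor_button": "select_floor"}
VOICE_UI = {"elevator please": "call", "take me up": "select_floor"}


def run(ui_map, inputs):
    """Drive the state machine through one UI and return the visited states."""
    elevator = Elevator()
    visited = [elevator.state]
    for raw in inputs:
        # System events such as "arrive" pass through unchanged.
        visited.append(elevator.handle(ui_map.get(raw, raw)))
    return visited


buttons = run(BUTTON_UI, ["hall_button", "arrive", "floor_button", "arrive"])
voice = run(VOICE_UI, ["elevator please", "arrive", "take me up", "arrive"])
print(buttons == voice)
```

Because both front ends reduce to the same event sequence, the visited states are identical, and any difference measured between them can be attributed to the mode of interaction rather than to the underlying process.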

4 Example Use of GUIDE-AR

As an example use of the GUIDE-AR framework, we present the implementation of two user interfaces for an assistive robotic manipulator system mounted to a mobility scooter that we have developed in our previous work [4]. The system consists of a mobility scooter with a robotic manipulator that is capable of picking and placing objects. The intended use case is for people with limited mobility to be able to use the robot arm to pick up arbitrary items from an open environment such as a grocery store with little to no training.

We have developed two user interfaces for this system: the graphical user interface (GUI), which allows the user to interact with the system entirely through a touchscreen, and the tangible user interface (TUI), which makes use of devices in the real world, such as buttons, laser pointers, and a projector-camera system that enables augmented reality.

4.1 Assistive Robot System Overview

The assistive robot manipulator system consists of a Universal Robots UR5 robotic arm equipped with a Robotiq 2F-85 electric parallel gripper, mounted on a Merits Pioneer 10 mobility scooter, as shown in Figure 2. Five Occipital Structure depth cameras provide perception for the system. A workstation is mounted on the rear of the scooter and is connected to the robot, sensors, and the hardware for the two UIs, the Graphical User Interface (GUI) and Tangible User Interface (TUI). The workstation has an Intel Core i7-9700K CPU, 32 GB of memory, and an Nvidia GTX 1080 Ti GPU. The GUI uses a 10-inch touchscreen mounted on one of the armrests within reach of the user. The TUI consists of a box mounted on one of the armrests with six dimmable buttons and a joystick, along with a laser pointing device mounted to the end effector for object selection, and a projector aimed towards the workspace of the arm that enables the system to highlight objects in the world when combined with the depth cameras.

Each subsystem of the software is implemented as a ROS node running on the workstation. The system uses GPD for grasp pose detection, and OpenRAVE and TrajOpt for collision avoidance and motion planning [15–17].

4.2 HRI State Diagram

The desired use of our system is picking items in an open environment such as a grocery store with little to no training. The state machine we have designed allows the user to select an object, pick it automatically, and then decide between dropping it in a basket mounted to the scooter or placing it in a different location in the world.

Figure 3 is a state diagram that uses actions and resultant states to represent the task being performed by the robot, independent of the user interface being utilized. The labels on the nodes represent the state of the robot, and the labels on the edges represent the option selected on the touchscreen, the button pressed, or other action taken by the user.

States

| State | Description |
| --- | --- |
| Drive | Arm is stowed and the user is free to drive the scooter |
| Selection | The system is waiting for the user to select an object |
| Confirmation | The user must confirm that the displayed object is the intended object |
| Pick | Robot picks object |
| Basket | Robot drops object in basket |
| Drive w/ Obj | Arm is stowed with the object held and the user is free to drive the scooter |
| Place Selection | The user must select a location to place the object |
| Place Confirmation | The user must confirm that the displayed location is correct |
| Place | Robot drops object in selected location |
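
As a rough illustration of how such a diagram can drive any front end, the sketch below stores the states from the table as a transition map, lists the actions a UI should offer in each state, and checks that every state can be reached from Drive. Figure 3 is not reproduced here, so the edge labels (`pick`, `select`, `yes`, `no`, `basket`, `place`, `done`) and the edges themselves are assumptions inferred from the prose description, not the authors' actual diagram.

```python
from collections import deque

# State names are taken from the table above; the edge labels and edges
# are assumptions made for this sketch.
HRI_TRANSITIONS = {
    "Drive": {"pick": "Selection"},
    "Selection": {"select": "Confirmation"},
    "Confirmation": {"yes": "Pick", "no": "Selection"},
    "Pick": {"basket": "Basket", "place": "Drive w/ Obj"},
    "Basket": {"done": "Drive"},
    "Drive w/ Obj": {"place": "Place Selection"},
    "Place Selection": {"select": "Place Confirmation"},
    "Place Confirmation": {"yes": "Place", "no": "Place Selection"},
    "Place": {"done": "Drive"},
}


def available_actions(state):
    """Actions a UI should offer in `state` (buttons shown or LEDs lit)."""
    return sorted(HRI_TRANSITIONS[state])


def reachable(start):
    """Breadth-first search over the diagram to find every reachable state."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in HRI_TRANSITIONS[queue.popleft()].values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(available_actions("Confirmation"))
print(reachable("Drive") == set(HRI_TRANSITIONS))
```

Keeping the diagram as data means that a touchscreen and a button box can both query `available_actions` for the current state, and a reachability check of this kind is one simple way to spot dead or redundant states while the diagram is still being designed.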

4.3 Design for Different Interaction Methods

| Principle | Graphical | Tangible |
| --- | --- | --- |
| Communicative | Descriptive text prompts. Only actions that can be taken from the current state are shown to the user. | Descriptive voice prompts. Buttons light up to indicate what actions can be taken from the current state. Eliminates the need for context switch between UI and world. |
| Reliable | User must confirm that the correct object is selected. Error handling for grasp failure. | User must confirm that the correct object is selected. Error handling for grasp failure. |
| Accessible | On-screen buttons are large and clearly labelled. Position of touchscreen is adjustable. | Laser controlled with joystick instead of handheld, reducing the amount of fine motor skills needed. Position of button box is adjustable. |

Table 1. Description of how both user interfaces comply with our design principles

As previously mentioned, we have created two different UIs to operate the system, the graphical user interface (GUI) and tangible user interface (TUI). The GUI was designed with the intent to keep the user’s focus on a touch screen using an application. In contrast, the TUI has been designed to keep the user’s focus on the world using a set of buttons and augmented reality. Both UIs comply with the design principles we have outlined, as shown in Table 1.

4.3.1 Touchscreen-based GUI

We consider the GUI to be representative of the state of the art. We designed it to be a suitable control for making comparisons against, under the assumption that no matter how the GUI is implemented, it still requires the user to switch focus from the real world to the virtual representation of the world. This context switch occurs for any type of UI that is based primarily on a screen that provides a representation of the world. We believe that this presents an additional cognitive load on the user, so we designed the GUI as a baseline to study this effect.

4.3.1.1 User Experience of the GUI

At the start of this project, the assistive manipulation scooter had a single interface, which used a screen, an external four-button pad, and a laser pointing device to operate the system. It was also designed to be used by the researchers and designers of the system. Thus, extraneous information about grasping methods and the like would be displayed or prompted while operating the system.

We decided to consolidate the user’s area of interaction and the number of steps needed to operate the system to a minimum to lower its cognitive load on the user. This led to the design of the GUI, which allows the user to operate the system entirely through a touch screen. This new GUI removed the need for a laser pointing device and use of the button pad. Selection of objects and operating the pick-and-place features are now all done through one application on a touch screen.

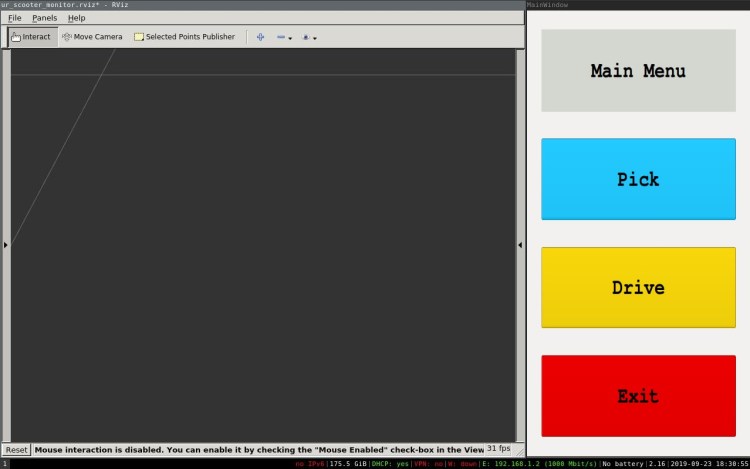

When we were designing the GUI, we wanted it to be accessible to as large a population as possible, so we took color, cognitive issues, and fine motor issues into consideration for usability. There are at most three buttons on the screen at one time, each colored blue, yellow, or red, as seen in Figure 5. These particular colors were chosen since red, yellow, and blue are less likely to be confused by those with red-green colorblindness, which is the most common form of colorblindness according to the National Eye Institute [18]. We also made the buttons large so that they would be easily selectable by those with limited fine motor ability.

The GUI includes a tap to zoom feature, which also assists in making the GUI easy to use for people with motor disabilities by allowing the user’s selection area to be smaller (i.e., not requiring large movements of a person’s hand or arm) and making the items to be selected larger (i.e., not requiring fine motor control in order to be able to select a small region on the touchscreen). This accessible design makes it easier for the user to select the desired object.

As mentioned in the system overview, the touch screen is placed along the armrest on the side of the user’s dominant hand so that it is in the easiest place for the user to access. There are other options that could be considered for accessibility, such as creating a key guard for the screen that provides guidance to the regions of the screen that are supposed to be touched.

4.3.1.2 Building the GUI

To build the GUI, we implemented an RViz window to show the world to the user with a custom-made Qt application to operate the pick-and-place system. RViz shows what the five depth sensors are publishing via ROS and displays it to the user as a colorized PointCloud2. To select an object or placement location, the user can tap on the screen to zoom in on the desired object for selection or the desired location’s general area for placing an object, then select it with a second tap. The rest of the process, including confirming objects, choosing placement options, prompts and the like, is in a custom-made Qt application. Qt is a commonly used GUI toolkit that can be used from C++ or Python, and as the bulk of the system runs on Python, we used Qt to implement the GUI. The final product of the Qt application, tap to zoom, and item selection can be seen in Figures 6 and 7.

As previously mentioned, the representation of the world shown to the user (as shown in Figure 6) is stitched from the five depth cameras that are also used for grasp planning. These sensors do not have integrated RGB cameras, so another RGB camera pointed towards the workspace is used to color the pointcloud. First, the RGB image is registered to each of the depth images given the pose of RGB camera and the depth cameras. Then, an array of points with fields for the position and color is created from the registered depth and RGB images. This array is then published as a ROS PointCloud2 message so that it can be visualized in RViz.
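
The colorization step can be sketched in a few lines. The version below is a simplified stand-in rather than the system's implementation: instead of registering the RGB image to each depth image, it projects each 3D point directly into a single RGB camera with a pinhole model and copies the pixel color. The function name `colorize`, the nested-list data layout, and the toy camera parameters are all assumptions; a real node would likely vectorize this and pack the result into a ROS PointCloud2 message.

```python
def colorize(points, rgb_image, K, R, t):
    """Attach a color from `rgb_image` to each 3D point.

    points    : list of (x, y, z) in the depth camera frame
    rgb_image : rows of (r, g, b) tuples
    K         : 3x3 RGB camera intrinsics as nested lists
    R, t      : rotation (3x3) and translation (3,) from depth to RGB frame
    """
    height, width = len(rgb_image), len(rgb_image[0])
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    colored = []
    for p in points:
        # Transform the point into the RGB camera frame.
        x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        if z <= 0:
            continue  # behind the RGB camera
        # Pinhole projection to pixel coordinates.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        # Note: no occlusion test is done, so a hidden surface can pick up
        # the color of whatever the RGB camera sees in front of it.
        if 0 <= u < width and 0 <= v < height:
            colored.append((*p, *rgb_image[v][u]))
    return colored


# Toy 4x4 image whose pixel color encodes its own coordinates.
image = [[(u * 10, v * 10, 0) for u in range(4)] for v in range(4)]
K = [[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
points = [(0.0, 0.0, 1.0), (0.01, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 0.0, -1.0)]

colored = colorize(points, image, K, R, t)
print(colored)
```

Only the first two points land inside the toy image; the third projects outside it and the fourth lies behind the camera, so both are dropped.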

In Figures 6 and 7, a bug in the coloring of the pointcloud draws a second projection of the table on the floor. This is caused by using only one RGB camera for the colorization algorithm, which means that for some sensors there is a large disparity between the position of the RGB camera and the depth camera. This can be fixed trivially by adding additional RGB cameras, but we have not yet been able to do this due to the COVID-19 pandemic. For a future publication, we would update these figures.

4.3.1.3 Improving the GUI

We are always thinking of ways to improve the GUI as we prepare it for testing. One item is improving the colorized pointcloud. Since we are only using a single RGB camera to colorize the pointcloud, several of the five depth cameras are far enough away from the RGB camera that duplicate projections of objects can be drawn on parts of the pointcloud. This can be seen in Figure 6, where a second view of the table is projected onto the floor. The issue arises when the distance between the RGB camera and a depth camera is large enough that objects occlude the RGB camera’s view of the surfaces that the depth camera is viewing. It can be solved by colorizing the pointcloud with multiple RGB cameras such that there is never a large distance between an RGB camera and the depth cameras for which it provides color data.

There are also several improvements that can be made to the design of the GUI. Currently, the GUI shows two separate windows: the RViz instance for displaying pointclouds, and the Qt application through which buttons and prompts are displayed. We would like to design the GUI so that both windows are integrated into one. This combination would allow us to hide the pointcloud window when it is not necessary, further simplifying the user experience.

4.3.2 World-based TUI

The context switch discussed previously, in which the user must switch focus between the world and the screen, can be eliminated entirely by building the UI in the world itself. We believe that this will reduce the cognitive load on the user. Towards this goal, we have designed a TUI, which uses a novel combination of technologies that allows the object selection task outlined in Figure 3 to be carried out while keeping all feedback to the user based in the real world.

4.3.2.1 Design of the TUI

We designed the TUI to give the user the ability to interact directly with the world around them. The TUI utilizes a laser to select objects with a control panel mounted on the arm of the scooter itself, as shown in Figure 8, alongside multiple methods of user feedback. Feedback is provided using a projector to highlight objects and locations directly in the world, as well as audio to provide status information about the system.

The control panel contains a joystick and six buttons that have LEDs within them. Each button can be lit individually. These buttons are utilized to indicate the system’s state. A button that is lit a solid color indicates the current system state, and a button that is flashing indicates to the user that they must make a choice (e.g., the yes and no buttons flash when the user is asked whether this is the correct object). Outside of these two conditions, the buttons remain off.
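
The lighting rule described above (solid for the current state, flashing for a pending choice, off otherwise) can be expressed as a small pure function. This is only a sketch: the six button names are hypothetical, since the source states only that there are six lit buttons including yes and no, and the function name `led_modes` is likewise invented.

```python
# Hypothetical button names; only "yes" and "no" are mentioned in the text.
BUTTONS = ("pick", "basket", "place", "yes", "no", "cancel")


def led_modes(state_button=None, choices=()):
    """Return the LED mode for every button on the panel.

    state_button : button lit solid to show the current system state
    choices      : buttons that flash because the user must choose one
    """
    modes = {name: "off" for name in BUTTONS}
    if state_button is not None:
        modes[state_button] = "solid"
    for name in choices:
        modes[name] = "flashing"
    return modes


# While confirming a pick, "pick" stays solid and yes/no flash.
confirming = led_modes(state_button="pick", choices=("yes", "no"))
print(confirming)
```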

4.3.2.2 Creating the TUI

The joystick and button box for the TUI are controlled via an Arduino Mega 2560 development board. This board contains an ATmega2560 processor, which is the heart of the system. Communication between the microcontroller and the system is done over USB via the ROSSerial Arduino Library. This library allows you to create a ROS node on the Arduino itself, and it allows this node to communicate with the system via a serial node.

The microcontroller communicates the state of the control panel when the system is booted. It sends messages containing the state of each button and the state of the joystick to the system. The system controls the TUI operation state based upon these inputs and publishes messages containing any required updates. The microcontroller processes these messages and updates the external circuitry based upon their content (e.g., flashing the yes and no LEDs or enabling the lasers). The computer processes the state of the system and does the computationally intensive tasks. The state machine follows the principles laid out in Figure 3 and calls the proper back-end functionality to allow system operation.

An onboard voice prompting system also provides feedback to the user during their operation of the system via the TUI. These voice prompts are run on a separate thread, implemented via Google’s Text-to-Speech API. These voice prompts indicate to the user what state the system is in, and what they must do to utilize the system. They allow the user to be aware of the current state of the system. This is important for cases when the system is stitching pointclouds or computing grasps as the silence and stillness of the system may worry the user that something is wrong. With a less technically inclined user in mind, these prompts include indications such as the colors of the specific buttons to make the choices as clear as possible.

Item selection is done via the joystick contained within the control panel and a pair of lasers attached to the end effector of the UR5 (as shown in Figure 9). Once the user selects to pick up an object and the system completes its scan of the environment, the UR5 moves to a position to allow object selection with the laser. The user controls the angle of the arm via the joystick and, in turn, they are able to place both dots of the laser on whatever object they intend to pick up. The system detects the dots shown by both lasers and proceeds to isolate the object. Upon isolating the object, it is highlighted via the mounted projector to allow the user to confirm that the proper item has been selected.

A dynamic spatial augmented reality (DSAR) system is used to highlight objects and locations while operating the TUI. The projector provides feedback to the user about the grasp radius of the arm and object segmentation. Before the user selects an object with the laser, the projector will highlight all surfaces within the robot’s reach one color, and all surfaces that are outside the robot’s reach another color, as shown in Figure 11. When an object is selected, the projector will highlight the segmented object so that the user can confirm with a button that the system has identified the correct object to pick, as shown in Figure 10. When a location for a place is selected, the location of the place is highlighted as well.

4.3.2.3 Augmented Reality

The DSAR system highlights objects and places in the world by projecting a visualization of the pointcloud acquired by the depth cameras. This visualization is generated by a virtual camera placed into the 3D environment along with the pointcloud. The virtual camera is then given the same intrinsics and extrinsics as the projector so that when the image from the virtual camera is projected through the projector, each point from the pointcloud is rendered over its location in the real world.

We determined the intrinsics of the projector lens by mounting the projector a fixed distance from a board orthogonal to the optical axis, projecting the image, and measuring the locations of the corners as described in our previous work [4]. Our methods for projection mapping to highlight objects in the environment are similar to those presented by Gelšvartas, Simutis, and Maskeliūnas, except that our system renders a pointcloud instead of a mesh [3].

Let W and H be the physical width and height of the projected image on the image plane, w and h be the width and height of the image in pixels, X and Y be the distances along their respective axes from the origin (top-left) point of the image to the principal point (the intersection of the optical axis and the image plane), and Z be the distance from the projector to the image plane. By similar triangles, the focal length f of the projector in pixels is

$$f_x = f_y = w \cdot \frac{Z}{W}.$$

The principal point $(c_x, c_y)$ is then calculated in pixels:

$$c_x = w \cdot \frac{X}{W}, \qquad c_y = h \cdot \frac{Y}{H}.$$

The projector was then modeled as a pinhole camera with an intrinsic camera matrix K:

$$K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

This camera matrix K is then used as the intrinsics of the virtual camera in the RViz environment so that the camera and the projector have the same intrinsics and extrinsics, allowing the projector to function as a “backwards camera” and all points in the pointcloud to be projected back onto their real-world location.
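
A small numeric sketch can help sanity-check such a calibration. It builds K from the measured quantities using the similar-triangles form of the pinhole model, f = w·Z/W, and then verifies that the measured image corners project to pixel (0, 0) and (w, h). The function names and the measurement values are hypothetical and chosen only so the arithmetic is easy to follow; they are not the values used for the actual projector.

```python
def projector_intrinsics(W, H, w, h, X, Y, Z):
    """Pinhole intrinsics for a projector from a flat-board measurement.

    W, H : physical size of the projected image on the board
    w, h : image size in pixels
    X, Y : physical offset from the image's top-left corner to the principal point
    Z    : distance from the projector to the board (same unit as W, H, X, Y)
    """
    f = w * Z / W          # focal length in pixels (square pixels assumed)
    cx = w * X / W         # principal point, x, in pixels
    cy = h * Y / H         # principal point, y, in pixels
    return [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]


def project(K, point):
    """Project a 3D point in the projector frame to pixel coordinates."""
    x, y, z = point
    return (K[0][0] * x / z + K[0][2], K[1][1] * y / z + K[1][2])


# Hypothetical measurements in meters for a 1920x1080 projector.
W, H, w, h, X, Y, Z = 2.0, 1.125, 1920, 1080, 1.0, 0.75, 3.0
K = projector_intrinsics(W, H, w, h, X, Y, Z)

# The board corners, expressed relative to the optical axis.
top_left = project(K, (-X, -Y, Z))
bottom_right = project(K, (W - X, H - Y, Z))
print(K)
print(top_left, bottom_right)
```

If the corners do not map back to the edges of the pixel grid, either a measurement or the focal-length relation is off, which makes this a convenient check before the matrix is handed to the virtual camera.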

4.3.2.4 Improving the TUI

We are continuing to improve aspects of the TUI, including improving the DSAR system with a smaller projector with a wider field of view, as well as adding speech-based prompts and voice recognition. The registration of the projector can be improved by projecting a Gray code pattern over a chessboard and using a registered camera to compute a homography. A custom PCB is also in development to allow for a smaller form factor for the control panel.

5 Discussion

We hope that GUIDE-AR can be used to aid researchers in comparing different types of user interfaces for assistive robots. In assistive robotics, adaptability is key since people with different abilities require different solutions to perform the same tasks. We hope that our guidelines for assistive robots can help researchers in evaluating the performance of user interfaces that, while very different in mode of interaction, are ultimately designed to perform the same task.

Our work to date has established a framework for researching different modes of user interaction for our system and other assistive robot systems. In order to determine the best interface design (or to learn which elements of each interface design should be combined into a new interface for controlling the scooter-mounted robot arm), we plan to conduct a user study comparing the two different types of UIs we have designed. The target population for our study consists of people who are at least 65 years old and who are able to get in and out of the seat on the scooter independently.

We plan to use our guidelines to help us develop novel types of UIs for the mobility scooter or other systems. Examples of possible UI designs would be implementing speech-recognition, eye tracking, or sip-and-puff control. Although our focus has been on assistive robots, we believe that the GUIDE-AR framework could also be applied to other applications of human-robot interaction, particularly when direct comparisons of interfaces are desired.

Acknowledgements

This work has been supported in part by the National Science Foundation (IIS-1426968, IIS-1427081, IIS-1724191, IIS-1724257, IIS-1763469), NASA (NNX16AC48A, NNX13AQ85G), ONR (N000141410047), Amazon through an ARA to Platt, and Google through a FRA to Platt.

References

[1] B. Graf, M. Hans, R. D. Schraft. Care-O-bot II—Development of a next generation robotic home assistant. Autonomous robots, 2004. 16(2), 193–205

[2] C. C. Kemp, C. D. Anderson, H. Nguyen, A. J. Trevor, Z. Xu. A point-and-click interface for the real world: laser designation of objects for mobile manipulation. In: 2008 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI) (IEEE, 2008) 241–248

[3] J. Gelšvartas, R. Simutis, R. Maskeliūnas. Projection Mapping User Interface for Disabled People. Journal of Healthcare Engineering, 2018

[4] D. Wang, C. Kohler, A. ten Pas, A. Wilkinson, M. Liu, H. Yanco, R. Platt. Towards Assistive Robotic Pick and Place in Open World Environments. In: Proceedings of the International Symposium on Robotics Research (ISRR) (2019) ArXiv preprint arXiv:1809.09541

[5] C. Martens, N. Ruchel, O. Lang, O. Ivlev, A. Graser. A friend for assisting handicapped people. IEEE Robotics & Automation Magazine, 2001. 8(1), 57–65

[6] P. M. Grice, C. C. Kemp. Assistive mobile manipulation: Designing for operators with motor impairments. In: RSS 2016 Workshop on Socially and Physically Assistive Robotics for Humanity (2016)

[7] F. Achic, J. Montero, C. Penaloza, F. Cuellar. Hybrid BCI system to operate an electric wheelchair and a robotic arm for navigation and manipulation tasks. In: 2016 IEEE Workshop on Advanced Robotics and Its Social Impacts (ARSO) (IEEE, 2016) 249–254

[8] N. C. M. Nickelsen. Imagining and tinkering with assistive robotics in care for the disabled. Paladyn, Journal of Behavioral Robotics, 2019. 10(1), 128–139

[9] J. P. Cofre, G. Moraga, C. Rusu, I. Mercado, R. Inostroza, C. Jimenez. Developing a Touchscreen-based Domotic Tool for Users with Motor Disabilities. In: 2012 Ninth International Conference on Information Technology – New Generations (2012) 696–701

[10] Y. S. Choi, C. D. Anderson, J. D. Glass, C. C. Kemp. Laser pointers and a touch screen: intuitive interfaces for autonomous mobile manipulation for the motor impaired. In: Proceedings of the 10th international ACM SIGACCESS conference on Computers and accessibility (ACM, 2008) 225–232

[11] M. Gualtieri, J. Kuczynski, A. M. Shultz, A. Ten Pas, R. Platt, H. Yanco. Open world assistive grasping using laser selection. In: 2017 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2017) 4052–4057

[12] C. P. Quintero, R. Tatsambon, M. Gridseth, M. Jägersand. Visual pointing gestures for bi-directional human robot interaction in a pick-and-place task. In: 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (2015) 349–354

[13] A. Rouhollahi, M. Azmoun, M. T. Masouleh. Experimental study on the visual servoing of a 4-DOF parallel robot for pick-and-place purpose. In: 2018 6th Iranian Joint Congress on Fuzzy and Intelligent Systems (CFIS) (2018) 27–30

[14] S. M. Chacko, V. Kapila. An Augmented Reality Interface for Human-Robot Interaction in Unconstrained Environments. In: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2019) 3222–3228

[15] M. Quigley, K. Conley, B. Gerkey, J. Faust, T. Foote, J. Leibs, R. Wheeler, A. Ng. ROS: an open-source Robot Operating System. In: ICRA Workshop on Open Source Software, volume 3 (2009)

[16] A. ten Pas, M. Gualtieri, K. Saenko, R. Platt. Grasp Pose Detection in Point Clouds. The International Journal of Robotics Research, 2017. 36(13-14), 1455–1473. URL https://doi.org/10.1177/0278364917735594

[17] M. Gualtieri, A. ten Pas, K. Saenko, R. Platt. High precision grasp pose detection in dense clutter. In: 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2016) 598–605

[18] National Eye Institute. Types of Color Blindness. 2019